As vehicles get smarter and more automated, Advanced Driver Assistance Systems (ADAS) have become an essential part of the next-generation car. ADAS technology is designed to improve vehicle safety and the driving experience. With fast-growing artificial intelligence and Vehicle-to-everything (V2X) communication technologies, ADAS can now also help reduce traffic congestion and road accidents by connecting to road-side units (RSUs) and cloud servers to facilitate intelligent transportation. ADAS gives both human drivers and autonomous vehicles better perception of the road environment, accelerating the move toward autonomous driving.

Driving safety and parking safety are the two major ADAS feature areas in vehicles, covering collision warning and intervention, driving control and parking assistance. In addition, as the industry moves toward semi- and fully automated driving, other forms of driver assistance are becoming important, such as driver monitoring, high-beam control, improved forward visibility at night, and head-up displays that present information in the driver’s forward line of sight.1

According to a Fortune Business Insights research report, the global ADAS market is forecast to grow from US$27.52 billion in 2021 to US$58.59 billion in 2028, a CAGR of 11.4%.2 Yole’s research says, “Today, only 12.3% of the 1,300 million cars on the road worldwide are fitted with ADAS technology. This figure is projected to grow to 49% of the 1,800 million cars on the road by 2030. From 21% five years ago, ADAS is now fitted onto 65% of all vehicle production in 2022, and this number will reach 86% by 2027.”3 Roland Berger’s survey also shows that ADAS features will be ubiquitous by 2025, with 85% of vehicles produced globally featuring some level of driving automation.4
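
The growth rates quoted in market forecasts like these can be sanity-checked with the standard compound annual growth rate formula. The sketch below is only an illustration: the function names `cagr` and `project` are made up for this example, and the inputs are the Fortune Business Insights figures cited above.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.114 means 11.4%)."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1.0 / years) - 1.0


def project(start_value: float, rate: float, years: int) -> float:
    """Value reached after compounding `rate` once per year for `years` years."""
    return start_value * (1.0 + rate) ** years


# Figures quoted in the text, in US$ billion (2021 -> 2028 is 7 years).
adas_rate = cagr(27.52, 58.59, years=2028 - 2021)
print(f"Implied ADAS market CAGR 2021-2028: {adas_rate:.1%}")
```

With these inputs the implied rate comes out at about 11.4%, which matches the figure in the report.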

Key ADAS components

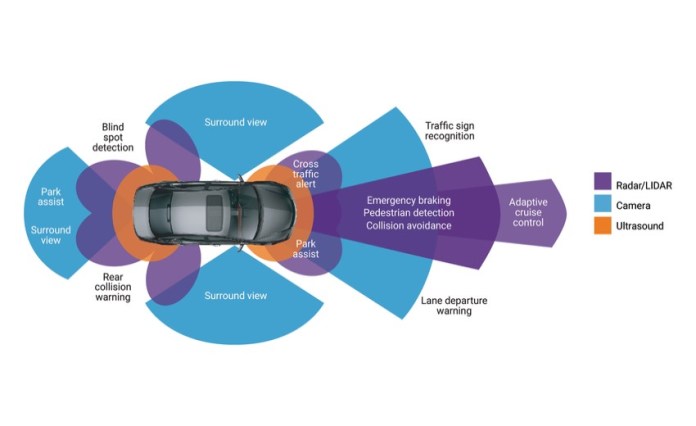

The vital components of ADAS include cameras, ultrasonic sensors, radar and LiDAR. Sensor fusion is adopted to enhance detection accuracy for automated driving and intelligent transportation. Mobileye showcased its self-driving Robotaxi, which handles night-time driving with environmental sensing technology built on cameras positioned around the vehicle, long- and short-range LiDAR sensors and a full array of radar sensors. The cameras and the radar and LiDAR sensors operate as two independent subsystems, each serving as a backup to the other rather than as complementary systems.5
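
As a rough illustration of why fusing sensors can improve accuracy, the sketch below combines independent distance estimates by inverse-variance weighting, a textbook fusion rule. It is not a description of Mobileye’s system or of any vendor’s method. The function name `fuse_ranges` and the example noise figures are assumptions made up for this example.

```python
from typing import Iterable, Tuple


def fuse_ranges(measurements: Iterable[Tuple[float, float]]) -> Tuple[float, float]:
    """Fuse (range_m, std_dev_m) pairs from independent sensors.

    Each measurement is weighted by 1 / variance, so the more precise
    sensor dominates. Returns (fused_range_m, fused_std_dev_m).
    """
    weight_sum = 0.0
    weighted_range_sum = 0.0
    for range_m, std_dev_m in measurements:
        if std_dev_m <= 0:
            raise ValueError("standard deviation must be positive")
        weight = 1.0 / std_dev_m ** 2
        weight_sum += weight
        weighted_range_sum += weight * range_m
    if weight_sum == 0.0:
        raise ValueError("at least one measurement is required")
    return weighted_range_sum / weight_sum, (1.0 / weight_sum) ** 0.5


# Hypothetical readings for one object: camera 41.0 m (+/- 2.0 m), radar 40.2 m (+/- 0.5 m).
fused_range, fused_std = fuse_ranges([(41.0, 2.0), (40.2, 0.5)])
print(f"fused range: {fused_range:.2f} m, std dev: {fused_std:.2f} m")
```

The fused estimate lands close to the radar value because radar is assumed to be the more precise range sensor here, and the fused uncertainty is smaller than that of either input.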

Radar

According to Allied Market Research, “The global automotive radar market was valued at $4.08 billion in 2020, and is projected to reach $10.06 billion by 2028, at a CAGR of 12.6% from 2021 to 2028. The most lucrative segments are Adaptive Cruise Control (ACC), 77 GHz as well as short and medium range in terms of application, frequency and range respectively.”6 The key sensors used on automobiles today are radar and cameras, with ultrasonic technology playing a key role at short range and low speed, and LiDAR being used in autonomous driving. Radar measures speed and range and can accurately determine the distance to an object; long-range radar can measure the distance to objects as far as 300 to 500 meters away.
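
Radar measures speed through the Doppler effect: a target moving toward or away from the sensor shifts the frequency of the reflected wave in proportion to its radial speed. The sketch below shows that relationship for a 77 GHz carrier, the band named above. The function names are illustrative, and real radar signal processing involves considerably more than this one formula.

```python
C = 299_792_458.0  # speed of light in m/s


def doppler_shift_hz(radial_speed_mps: float, carrier_hz: float = 77e9) -> float:
    """Two-way Doppler shift produced by a target closing at radial_speed_mps."""
    return 2.0 * radial_speed_mps * carrier_hz / C


def radial_speed_mps(doppler_hz: float, carrier_hz: float = 77e9) -> float:
    """Radial speed recovered from a measured Doppler shift."""
    return doppler_hz * C / (2.0 * carrier_hz)


# A car closing at 30 m/s (108 km/h) seen by a 77 GHz radar.
shift = doppler_shift_hz(30.0)
print(f"Doppler shift: {shift / 1e3:.1f} kHz")
print(f"Recovered speed: {radial_speed_mps(shift):.1f} m/s")
```

At 77 GHz a closing speed of 30 m/s corresponds to a shift of roughly 15.4 kHz, which is why higher carrier frequencies make small speed differences easier to resolve.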

Short-range radar (SRR) systems are frequently used for blind spot or lane departure detection. SRR and long-range radar (LRR) are used together to support automatic emergency braking, adaptive cruise control and forward collision warning for automated driving.

The market research firm Technavio indicates that the most widely used long-range radar sensors operate at 77 GHz. For short and mid range there is more diversity, with 22-, 77-, and 79-GHz radar sensors gaining traction in the market. The research indicates that, apart from the emergence of intelligent transportation, the increased accuracy of environment perception achieved through sensor fusion is another major factor expected to boost the growth of the global automotive radar sensors market.7

One drawback of radar is that it lacks visual information about the objects it detects. 4D imaging radar was developed to address this issue: it can create high-resolution images that enhance the detection and classification of objects. NXP released a 4D imaging radar technology that delivers concurrent 3-in-1 multi-mode radar sensing across short-, mid- and long-range operation, enabling simultaneous sensing of a very wide field of view around the car. It delivers image-like, fine-resolution point clouds for simultaneous localization and mapping (SLAM) and for demanding use cases such as lost-parcel detection at a distance.

Camera

Yole’s research shows that radar has so far been the primary ADAS technology workhorse and is expected to reach US$9.3 billion in 2027, followed by camera modules (comprising an image sensor, optics and a camera enclosure) at US$8.9 billion. In parallel, LiDAR, the long-awaited technology breakthrough for autonomy, will represent US$1.7 billion, while the vision processors that power all the smart ADAS features will represent US$5.1 billion in 2027. Yole’s Imaging for Automotive 2022 report indicates that the number of cameras per car will increase from 12 today to 20, and that imaging for automotive will reach US$25 billion in 2027.8

Cameras are used for rear, front and surround view as well as for e-mirrors and driver monitoring systems. Exterior cameras are positioned on the front, back and sides of the vehicle to capture images of the road, street signs, pedestrians, vehicles and other obstacles. Camera makers are combining cloud and AI technologies with the T-box or car PC to overcome the camera’s limitations and provide advanced ADAS features such as multi-lane and multi-object detection or eye tracking. When connected to the cloud, cameras can not only analyze images but also trigger responses that improve safety, such as automatically initiating emergency braking or alerting the driver to a vehicle in the blind spot or to a lane departure, and in the future they could enable teleoperated driving.

Chimei Motor Electronics released a blind spot assistance system with embedded AI image recognition technology. It operates normally at night or in no-light environments using invisible IR, and under 80 mm/h rain testing thanks to its special gravity diversion design. It can set different warning levels for different “protected objects”, and can detect and track them even when they are still or shielded by bushes or public facilities. In addition, it offers dual-screen output of a “blind spot top view” and “the view of side mirror”.9

Thermal imaging cameras are a mature technology frequently used as a “car night vision system” in conditions of low visibility or complete darkness, because they do not depend on ambient light levels. Thermal imaging provides precise detection around the clock, regardless of poor weather and low light. Coupled with AI technology, these cameras can provide the reliability and redundancy required for autonomous cars.

Driver monitoring systems (DMS) are one of the major applications for in-vehicle cameras. TrendForce’s statistics show that camera-based DMS will undergo explosive growth, with a 92% CAGR in the 2020-2025 period.10 The revised General Safety Regulation requires that, as of July 2022, all new vehicle models introduced on the European market be equipped with advanced safety features, such as technology to detect driver drowsiness and distraction. Euro NCAP made driver monitoring a must for EU 5-star certification in 2020, and Child Presence Detection (CPD) is scored from 2023, extending monitoring beyond the driver to detecting a child’s presence in the vehicle and alerting the vehicle user or third-party services.11 These requirements are seen as driving forces for camera deployment in the car.

LiDAR

LiDAR technology detects objects and maps their distance, at ranges from a few meters to 1,000 meters. An automotive LiDAR system uses pulsed laser light to measure the distance between two vehicles by illuminating the target and measuring the reflected pulses with a sensor. Semi-autonomous and fully autonomous vehicles are a major driving force for market growth, as they increasingly use LiDAR sensors to generate large 3D maps for 360° vision and to obtain accurate information for self-navigation and object detection.
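
The pulsed measurement described above can be sketched in a few lines: the round-trip time of each laser pulse gives a range, and combining that range with the beam’s pointing angles gives one point of the 3D map. This is a minimal sketch under simplifying assumptions (ideal timing, no noise, a sensor-centered frame with x forward, y left and z up). The function names and the sample returns are hypothetical.

```python
import math
from typing import List, Tuple

C = 299_792_458.0  # speed of light in m/s


def tof_to_range_m(round_trip_s: float) -> float:
    """Range from pulse round-trip time; the pulse travels out and back."""
    return C * round_trip_s / 2.0


def to_cartesian(range_m: float, azimuth_deg: float, elevation_deg: float) -> Tuple[float, float, float]:
    """Convert a range plus beam angles to an (x, y, z) point in meters."""
    azimuth = math.radians(azimuth_deg)
    elevation = math.radians(elevation_deg)
    horizontal = range_m * math.cos(elevation)  # projection onto the ground plane
    return (
        horizontal * math.cos(azimuth),
        horizontal * math.sin(azimuth),
        range_m * math.sin(elevation),
    )


def returns_to_points(returns: List[Tuple[float, float, float]]) -> List[Tuple[float, float, float]]:
    """Map (round_trip_s, azimuth_deg, elevation_deg) returns to 3D points."""
    return [to_cartesian(tof_to_range_m(t), az, el) for t, az, el in returns]


# Three made-up returns: straight ahead, 90 degrees to the left, slightly downward.
for point in returns_to_points([(1.0e-6, 0.0, 0.0), (4.0e-7, 90.0, 0.0), (2.0e-7, 0.0, -5.0)]):
    print(tuple(round(coordinate, 2) for coordinate in point))
```

A round trip of one microsecond corresponds to a target about 150 meters away, which shows how precise the timing electronics must be to resolve distances of a few centimeters.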

Allied Market Research’s report indicates that the global automotive LiDAR market was valued at $221.7 million in 2020, and is projected to reach $1,831.9 million by 2028, registering a CAGR of 30.3% from 2021 to 2028. Mechanical and long-range LiDARs are projected as the most lucrative segments.12

LiDAR is a key enabler for autonomous cars; however, its high cost hampers the growth and mass adoption of automotive LiDAR compared with traditional systems such as radar and cameras. The overall cost of a LiDAR system, including high-end hardware, sensors and scanners, is approximately $20,000 to $75,000. In addition, post-processing such as point-cloud classification might require third-party software, which could cost around $20,000 to $30,000 for a single license.

Because high costs limit adoption, leading vendors and startups in the automotive LiDAR industry are focusing primarily on reducing costs and on enhancing the durability, performance and range of LiDARs. For instance, vendors are developing next-generation LiDAR sensors based on frequency-modulated continuous-wave (FMCW) technology, which helps determine both the velocity and the distance of the target. Unlike conventional LiDAR, FMCW LiDAR sensors are immune to background light and are not affected by other light sources transmitting at the same frequency. In addition, more manufacturers are eyeing gallium-based LiDAR because it has reduced the cost of sensors in recent years.

With regard to performance optimization, LeddarTech and Continental have been actively developing new technologies. LeddarTech recently launched the Pixell 3D flash LiDAR module, which has a 180-degree field of view (FoV), is designed for ADAS and autonomous driving applications, and is optimized for proximity detection and blind spot coverage in urban environments. Continental works with AEye to develop the HRL131 long-range LiDAR, providing precision sensing technology to enable the future of autonomous mobility. The HRL131 is a microelectromechanical systems (MEMS)-based adaptive LiDAR for Level 3 and Level 4 assisted and automated driving solutions, featuring high dynamic spatial resolution with long-range detection for high-speed highway scenarios or densely packed urban roads.
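
To show how a frequency-modulated continuous-wave sensor, as mentioned above, can recover both distance and velocity, the sketch below uses the textbook triangular-chirp model: the beat frequencies measured on the rising and falling ramps are averaged to get range and differenced to get Doppler. It is a simplified illustration, not any vendor’s implementation. The function names, the 1 GHz sweep, the 10 µs ramp and the 1550 nm wavelength are all assumed values chosen for the example.

```python
from typing import Tuple

C = 299_792_458.0  # speed of light in m/s


def simulate_beats(distance_m: float, closing_speed_mps: float, bandwidth_hz: float,
                   ramp_s: float, wavelength_m: float) -> Tuple[float, float]:
    """Forward model: beat frequencies on the up and down ramps.

    Assumes an approaching target and a range term larger than the Doppler term.
    """
    f_range = 2.0 * distance_m * bandwidth_hz / (C * ramp_s)
    f_doppler = 2.0 * closing_speed_mps / wavelength_m
    return f_range - f_doppler, f_range + f_doppler


def fmcw_range_velocity(f_beat_up_hz: float, f_beat_down_hz: float, bandwidth_hz: float,
                        ramp_s: float, wavelength_m: float) -> Tuple[float, float]:
    """Inverse model: (distance_m, closing_speed_mps) from the two beat frequencies."""
    f_range = (f_beat_up_hz + f_beat_down_hz) / 2.0    # Doppler cancels in the average
    f_doppler = (f_beat_down_hz - f_beat_up_hz) / 2.0  # range cancels in the difference
    distance_m = C * ramp_s * f_range / (2.0 * bandwidth_hz)
    closing_speed_mps = f_doppler * wavelength_m / 2.0
    return distance_m, closing_speed_mps


# Assumed sensor parameters and a target 100 m away closing at 20 m/s.
BANDWIDTH_HZ, RAMP_S, WAVELENGTH_M = 1.0e9, 10.0e-6, 1550e-9
up_hz, down_hz = simulate_beats(100.0, 20.0, BANDWIDTH_HZ, RAMP_S, WAVELENGTH_M)
distance, speed = fmcw_range_velocity(up_hz, down_hz, BANDWIDTH_HZ, RAMP_S, WAVELENGTH_M)
print(f"distance: {distance:.1f} m, closing speed: {speed:.1f} m/s")
```

Because velocity comes directly from the frequency difference within a single measurement, this approach does not need to compare successive frames to estimate how fast a target is moving.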

References:

1. https://www.sae.org/binaries/content/assets/cm/content/miscellaneous/adas-nomenclature.pdf

2. https://www.fortunebusinessinsights.com/industry-reports/adas-market-101897

3. https://www.i-micronews.com/automotive-industry-a-massive-ai-powered-transformation-is-ongoing/

4. https://www.rolandberger.com/en/Insights/Publications/Advanced-Driver-Assistance-Systems-A-ubiquitous-technology-for-the-future-of.html

5. https://www.intel.com/content/www/us/en/newsroom/news/mobileye-avs-now-driving-full-sensing-suite.html#gs.1gjvlz

6. https://www.alliedmarketresearch.com/automotive-RADAR-market

7. https://www.businesswire.com/news/home/20181212005506/en/Global-Automotive-Radar-Sensors-Market-2018-2022-21-CAGR-Projection-Over-the-Next-Four-Years-Technavio

8. http://www.yole.fr/Imaging_For_Automotive_March2022.aspx

9. https://www.taiwanexcellence.org/en/award/product/1110634

10. https://www.trendforce.com/presscenter/news/20201016-10514.html

11. https://cdn.euroncap.com/media/64101/euro-ncap-cpd-test-and-assessment-protocol-v10.pdf

12. https://www.alliedmarketresearch.com/automotive-lidar-market